The Microsoft world offers BCP, .NET, and SSIS for moving data. BCP is fast and efficient, but is limited. Bespoke .NET applications can be written to exact requirements, but can be rigid. SSIS is intended to bridge the gap, but can be overkill for simple applications and a bit of a challenge to deploy (or maybe it’s just me).

Because of the lack of a “Goldilocks” solution, I decided to experiment with PowerShell as an ETL scripting language. PowerShell integrates with .NET and offers language constructs found in modern programming environments. Because it’s not compiled, it does not require an extensive toolchain to develop and release.

The overall solution consists of

- Compiled, reusable Extraction and Loading Components using the .NET Framework

- A PowerShell Driver script

The intent is that the user customizes the driver script to use .NET components to move data. The .NET components are intended to be generic so that they do not need to be maintained for each application / use case. The PowerShell driver script orchestrates the data flow and optionally transforms the data in the process.

The source code and binaries are available from the DataVenture.io reposository on GitHub at https://github.com/dataventure-io/etl-tool.

.NET Components

The heavy lifting steps are implemented using the .NET Framework. The .NET components are implemented in a single library, called etl.lib.dll. The library implements extractor and loader classes. The extractor and loader classes are instantiated and called from PowerShell.

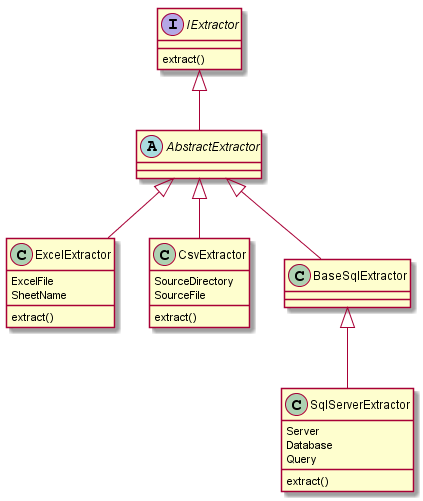

Extractor Classes

There are three concrete extractor classes: ExcelExtractor, CsvExtractor, and SqlServerExtractor. Each class implements an extract() method that returns a DataTable.

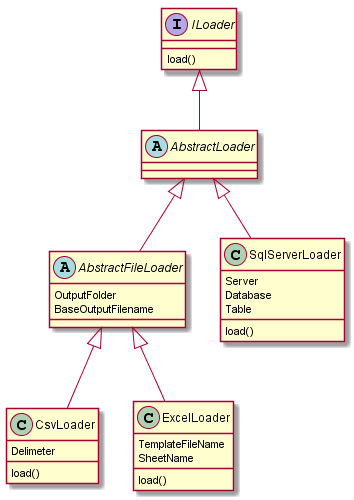

Loader Classes

As with the extractor hierarchy, there are three concrete loader classes: ExcelLoader, CsvLoader, and SqlServerLoader. Each implements a load() method which accepts a DataTable as a parameter. The DataTable is sent to the Loader’s target. In the case of the SqlServerLoader, the class uses the SQL Server Bulk Insert API.

Driver Script Operation

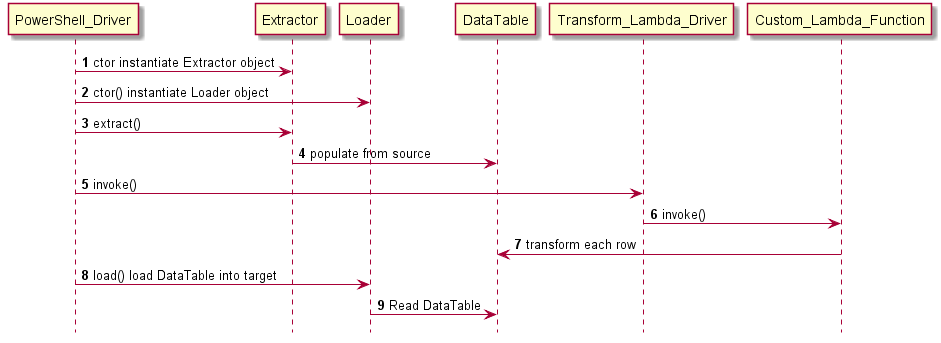

The ETL script operates by

- The PowerShell driver instantiates an Extractor class

- The PowerShell driver instantiates a Loader class

- The PowerShell driver invokes the extract() method on the Extractor class

- The Extractor reads the data source and fills a DataTable

- The PowerShell driver invokes the generic transformation lambda definition, passing the custom function expression and data table as parameters

- The generic lambda driver invokes the custom function for each element in the DataTable

- The custom function transforms each row

- The PowerShell driver invokes the load() method on the Loader class

- The Loader class reads the DataTable and writes the data to the target

Transformation Lambda Function

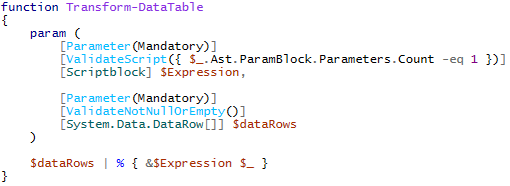

Generic Lambda Definition

The generic lambda function in this script is taken from the article https://www.powershellmagazine.com/2013/12/23/simplifying-data-manipulation-in-powershell-with-lambda-functions.

The function defintion specifies a script block ($Expression) and a list ($dataRows). The function evaluates the code in $Expression and calls it for each element in $dataRows.

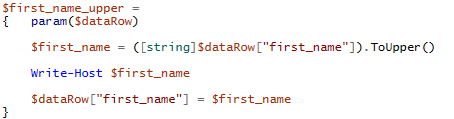

Custom Lamda Function

The custom lamda callback function is crazy simple in this example. It receives a single data row as a parameter. From within the callback scope, the function manipulates data within the data row and has access to global variables defined elsewhere in the script.

The custom lambda function is script assigned to a variable. The script is evaluated and executed at runtime.

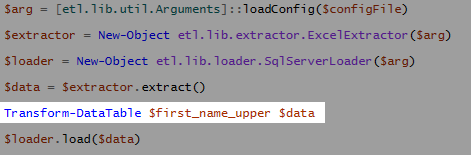

Calling the Lambda Function

Calling the lambda function is a matter of calling the generic lambda definition, passing the runtime callback expression ($first_name_upper) and the data table ($data).

Sample Driver Script

Below is a sample script that loads Excel data into SQL Server. It implements a simple lambda function to convert the first name to uppercase.

<# Sample PowerShell driver script etl.ps1

Usage: etl.ps1 <config_file.json>

#>

param

(

[string]$configFile

)

# generic lambda definition to transform data

function Transform-DataTable

{

param (

[Parameter(Mandatory)]

[ValidateScript({ $_.Ast.ParamBlock.Parameters.Count -eq 1 })]

[Scriptblock] $Expression,

[Parameter(Mandatory)]

[ValidateNotNullOrEmpty()]

[System.Data.DataRow[]] $dataRows

)

$dataRows | % { &$Expression $_ }

}

# sample lambda callback function to convert the first_name column to uppercase

$first_name_upper =

{ param($dataRow)

$first_name = ([string]$dataRow["first_name"]).ToUpper()

Write-Host $first_name

$dataRow["first_name"] = $first_name

}

# load dependencies

[System.Reflection.Assembly]::LoadFile("$($env:ETL_TOOL_HOME)\bin\Newtonsoft.Json.dll")

[System.Reflection.Assembly]::LoadFile("$($env:ETL_TOOL_HOME)\bin\Antlr4.Runtime.dll")

[System.Reflection.Assembly]::LoadFile("$($env:ETL_TOOL_HOME)\bin\etl.lib.dll")

# load the runtime arguments from the json configuration file.

$arg = [etl.lib.util.Arguments]::loadConfig($configFile)

# instantiate an ExcelExtractor object

$extractor = New-Object etl.lib.extractor.ExcelExtractor($arg)

# instantiate a SQLServerLoader object

$loader = New-Object etl.lib.loader.SqlServerLoader($arg)

# extract the data from an Excel sheet

$data = $extractor.extract()

# transform the data using the lambda function

Transform-DataTable $first_name_upper $data

#load the data into SQL Server

$loader.load($data)

Conclusion

PowerShell looks promising as a contender in the ETL space. It’s simple to reuse and can likely adapt to more complex applications. In addition to the ETL-centric functionality introduced here, PowerShell is geared toward automation, with support for file manipulation, system services, logging, email, and other features useful in data flow applications.

One issue with the current approach is that if one were to use multiple transformation lamdas, the driver function will iterate over the entire dataset for each transformation. A next step in the design will be to register multiple PowerShell callbacks to a .NET event to improve the efficency.

The PowerShell platform is becoming more capable. PowerShell 7 has introduced parallel pipelines, which allows for concurrent execution. Microsoft has shifted PowerShell direction to exclusively use .NET Core, which is a cross-platform .NET implementation. Unfortunately for the near term, the shift to .NET Core means that some libraries, such as the SQL Server Bulk Load API, are not yet available. But as more libraries become available, solutions will work on all .NET Core platforms, including Windows, MacOS, and Linux.